Rendering Serlio: A Pedagogy of Visualization using Neural Networks

Contributors

Rendering Fiction

(Style Transfer and Rendering)

The discourses on language and drawing established by the classical architectural treatise find new disciplinary relevance in current advancements and discussions concerning machine learning. The Serlio Code¹, a body of research that examines the illustrated expositions of Sebastiano Serlio through the lens of artificial intelligence, provides one such example. The intention of the project is not simply to synthesize new images that recreate Serlio’s illustrations, but rather to modulate their qualities and investigate their 2D-to-3D translation beyond the traditional rules of representation and orthographic projection. Architectural intelligence encoded in representation describes the ethos of an artistic endeavor, imposes severity and logic, and prepares forces and materials for the creation of the architectural object. This project outlines a digital culture that integrates canonical architectural intelligence into contemporary practice, producing a new form of agency and a new mode of dialogue between a designer and a particular precedent.

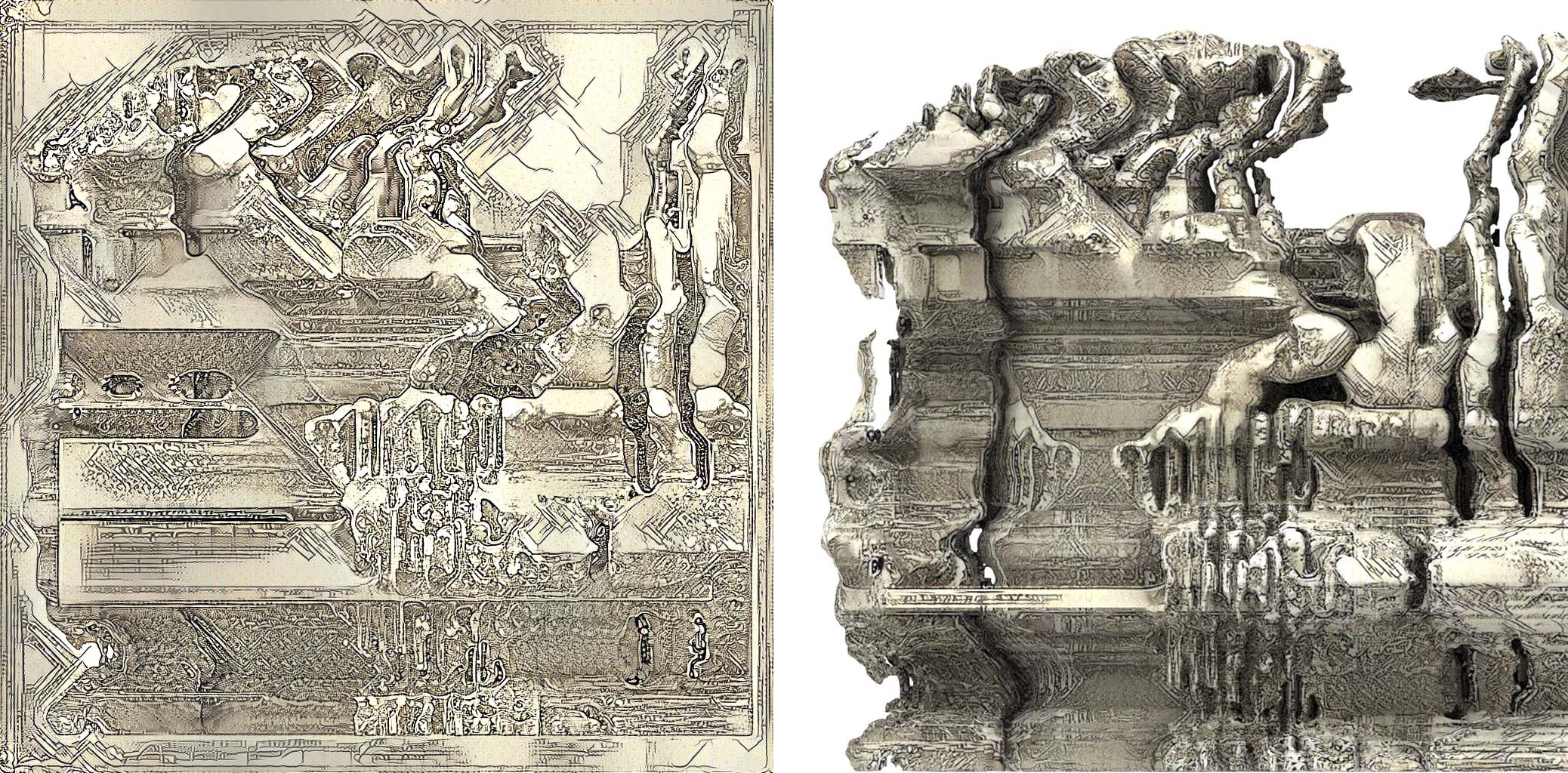

(Fig. 1. Portico Latent Walk)

Image-based neural networks can synthesize artificial images that are nearly indistinguishable from authentic ones. However, GANs² can also operate diagrammatically, creating an exchange between continuous analogical modulation and the codification of discrete digital units. Analog information (the training images) and digital information (random noise, which the generator transforms into candidate images) meet in the discriminator, whose judgments are then fed back to update both networks. This process creates a continuous feedback loop that, in each successive training cycle, transfers code into the analog pictorial flow of the image. In other words, the GAN renders a fictitious modulation of the analog and the digital through pictorial flux. (Fig. 1)
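Fig. 1 is a latent walk: a sequence of images produced by feeding a trained generator a path of noise vectors. The Python sketch below is not the project’s code; it only shows how such a path might be built with spherical linear interpolation (slerp), a common choice for Gaussian latent spaces. The 512-dimensional latent size, the function names, and the `generator` mentioned in the comments are assumptions. Each randomly drawn vector is a discrete “digital unit,” while the interpolated path between two of them supplies the continuous modulation that appears as pictorial flux once decoded into images.

```python
import numpy as np


def slerp(z0: np.ndarray, z1: np.ndarray, t: float) -> np.ndarray:
    """Spherical linear interpolation between two latent vectors (hypothetical helper)."""
    # Angle between the two vectors, measured on their normalized directions.
    u0 = z0 / np.linalg.norm(z0)
    u1 = z1 / np.linalg.norm(z1)
    omega = np.arccos(np.clip(np.dot(u0, u1), -1.0, 1.0))
    if np.isclose(omega, 0.0):
        # Nearly parallel vectors: fall back to plain linear interpolation.
        return (1.0 - t) * z0 + t * z1
    so = np.sin(omega)
    return (np.sin((1.0 - t) * omega) / so) * z0 + (np.sin(t * omega) / so) * z1


def latent_walk(z0: np.ndarray, z1: np.ndarray, steps: int) -> np.ndarray:
    """Return `steps` latent vectors along the arc from z0 to z1, endpoints included."""
    ts = np.linspace(0.0, 1.0, steps)
    return np.stack([slerp(z0, z1, t) for t in ts])


rng = np.random.default_rng(seed=0)
latent_dim = 512  # assumption: a StyleGAN-like latent size
z_start = rng.standard_normal(latent_dim)
z_end = rng.standard_normal(latent_dim)
frames = latent_walk(z_start, z_end, steps=8)

# Each row of `frames` would be decoded by a trained generator (hypothetical here),
# e.g. images = [generator(z) for z in frames], to produce the frames of the walk.
print(frames.shape)  # (8, 512)
```

Slerp is used instead of straight-line interpolation because Gaussian noise vectors of this size tend to lie near a shell of similar norm; a straight line between two of them passes through lower-norm points the generator rarely saw during training.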

Our representational tools continue to redefine our agency within the discipline, and this necessitates a reappraisal of the canon, such as Serlio’s treatise, through a hyper-digital lens. How can the democratization of technological innovation open new opportunities for agency when projects that use these neural networks, however visually impressive, often lack viable applications for the discipline? The creative adoption of neural networks not only redefines the terms under which we make images but also opens new aesthetic and social discourses. It is therefore necessary to augment existing AI strategies through the layering or reapplication of these neural networks.

(Rendered object after several iterations)

We must begin to question the way these tools shape our visualization pedagogy. While representation starts with a subjective structure presupposed to be isomorphic with, or identical to, that of the objects differentiated under unity, Deleuze generates the structure of the object out of pure difference itself. This includes both the a-subjective, differential ground of representation and the virtual, dynamic tendencies that inevitably transform it.³ If difference itself is what breaks representation away from the presumed isomorphism between the original images and the new language, then 2D-to-3D procedures offer a way to communicate the project through virtual and fabricated objects, and thereby to produce this difference.

While this project operates in the realm of drawing as represented by Serlio’s treatises, style transfer through UV maps breaks from the drawing at a certain point and enters the territory of rendering, a word used here to denote both interpretation and translation. Within each process of style transfer and rendering there is a continuous flux of difference moving away from the original isomorphic condition: from drawing to rendering to object. When the representation is carried into a fabricated object, representation is completely disrupted.
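The essay does not specify which style-transfer algorithm the project uses. As a point of reference only, the sketch below assumes the widely used neural style transfer formulation of Gatys et al., in which an image’s “style” is summarized by Gram matrices of convolutional feature maps. The random arrays stand in for real network activations, and the function names are hypothetical. The sketch shows one reason such a representation can move away from the original drawing: the Gram matrix keeps correlations between feature channels while discarding where those features sit in the image.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (channels, height, width) feature map.

    Entry (i, j) sums the product of channels i and j over all spatial
    positions, normalized by the number of positions. Spatial layout is
    discarded, which is why it serves as a "style" descriptor.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)


def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Mean squared difference between the Gram matrices of two feature maps."""
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.mean(diff ** 2))


rng = np.random.default_rng(seed=1)
# Stand-in for CNN activations (assumption: real ones would come from a
# pretrained network such as VGG-19, which this sketch does not load).
style_features = rng.standard_normal((4, 8, 8))

# Rearranging the spatial positions leaves the Gram matrix unchanged,
# so the "style" survives even though the image layout does not.
perm = rng.permutation(8 * 8)
shuffled = style_features.reshape(4, -1)[:, perm].reshape(4, 8, 8)
print(np.isclose(style_loss(shuffled, style_features), 0.0))  # True
```

In a pipeline of the kind the paragraph describes, an image stylized by minimizing such a loss would then be applied to the object’s surface through its UV map; that mapping step is not shown here.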

The Serlio Code is an important experiment for indexing and developing a design paradigm that sits squarely within our hyper-digital era. This approach to design speculates on the translation of architectural intelligence into artificial intelligence and establishes the need for new visualization pedagogies before returning to the language of architecture. The architect gains a new kind of agency by using contemporary mechanisms of cultural production. This agency is exercised through the intentional collection of the data set, the selection of images used to produce new objects, the process of three-dimensionalization, the assembly of fragments, and the final translation to form, yet much of this territory remains uncharted.

Gabriel Esquivel is an associate professor in the Department of Architecture at Texas A&M University. He is the director of the T4T Lab at Texas A&M University and the founder of the Advanced Research Fabrication Lab, which focuses on robotic-assisted fabrication and AI.

Shane Bugni is a student at Texas A&M University (’22). For the past two years he has worked in the AI research lab at Texas A&M, developing the course alongside Gabriel Esquivel. He has taught multiple international workshops, including Digital Futures 2021.

1. Jean Jaminet, Gabriel Esquivel, and Shane Bugni, “The Serlio Code: Analog-to-Digital Information Processing in Architecture and Artificial Intelligence,” ACADIA, 2021.

2. Generative Adversarial Networks.

3. Henry Somers-Hall, Hegel, Deleuze, and the Critique of Representation: Dialectics of Negation and Difference (Albany: State University of New York Press, 2012), 289.